June 21st, 2021 • Erika Tyagi, Poornima Rajeshwar, and Liz DeWolf

FINAL UPDATE: Data Reporting & Quality Scorecard, Round 4

June marks the fourth month since we started assessing each of the 53 state and federal carceral agencies on the transparency and quality of their COVID-19 data reporting. Since March, we have scored these agencies on the granularity of the COVID-19 variables they report and on the quality of those data. Each score translates to a letter grade.

This month, as with the two previous scoring rounds, more than 80% of agencies received an F. Click here for the raw scorecard data for all 53 agencies.

After four rounds, we have decided to discontinue the monthly assessments of agency dashboards until further notice. Fortunately, COVID-19 outbreaks in prisons appear to have slowed, making it less likely that agencies will make changes to what they report and how they report it on their dashboards. Though we have recently observed some changes in reporting practices, as noted below, overall there has been little variation over the previous two months.

Scoring these agencies has allowed us, and advocates across the country, to better understand irregularities in reporting across agencies, and thus to draw attention to a pervasive lack of transparency across carceral systems in the U.S. Through email, social media, formal testimony, and other forums, we’ve seen advocates cite our scorecards in calls for greater accountability in data reporting by carceral agencies. We hope our scores will continue to be used to push agencies to take greater responsibility for publicly communicating information about the wellbeing of those in their custody.

We will continue to monitor agency COVID-19 dashboards as part of our regular data collection efforts, and if we notice significant changes in how agencies report this information, we will update the scorecards accordingly. The scorecards will remain on the state, BOP, and ICE pages—along with the date of last update—and will continue to provide useful context for interpreting the COVID-19 data that we collect.

Key changes this round:

All but one letter grade remained the same from May to June. As of June 15th, the California Department of Corrections and Rehabilitation no longer reports new data on cumulative cases, active cases, or deaths among its staff. The agency announced this decision on its dashboard, citing the lifting of COVID-19 executive orders in California. Because of this change in reporting, the agency lost six points, and its letter grade dropped from a B to a D.

While no other letter grades changed, seven agencies saw their numeric scores change. The Maryland Department of Public Safety and Correctional Services and the South Carolina Department of Corrections each gained points for beginning to report facility-level vaccination data for incarcerated people. However, because of limited reporting in other areas, both agencies kept their prior grades (a D and an F, respectively). The New York State Department of Corrections and Community Supervision gained two points for beginning to report the total number of COVID-19 tests administered to incarcerated people; previously, the agency counted at most two tests per person, a figure much less useful than the true total.

The Minnesota Department of Corrections lost a point for switching from facility-level to systemwide reporting of the number of people currently incarcerated, though its grade remains a D. The correctional agencies in New Jersey, Rhode Island, and Utah each lost two points for updating their dashboards less frequently. Because these three agencies had already received failing grades, their letter grades did not change.

About the Metrics

Data Reporting

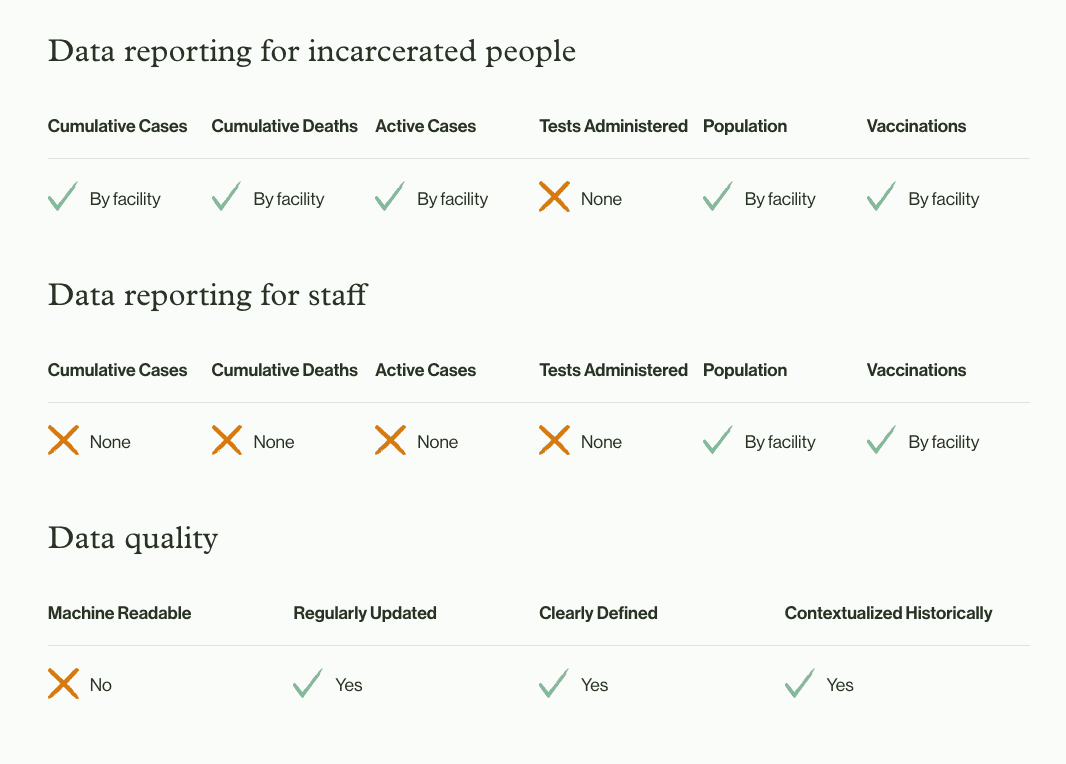

Our metrics for data reporting are tied to the 12 key variables we aim to collect from each jurisdiction. Out of these, six relate to incarcerated people and six to correctional staff. We have previously outlined why, at a minimum, all correctional agencies should report COVID-19 cases, deaths, and tests for incarcerated people and staff, and also why the reporting of real-time facility-level population data is essential. Knowing how many people are incarcerated and work at each facility puts the numbers of total cases, deaths, and tests in context. Finally, knowing the number of people who have been vaccinated is critical to understanding how the pandemic is being managed behind bars. No agency, with the exception of the West Virginia Division of Corrections and Rehabilitation, reports all 12 variables, and even this agency fails to report some variables at the facility level.

To assign scores for data reporting, we first assessed whether an agency reports each variable at all, and then whether it reports the variable as a statewide aggregate or at the facility level. Each variable is worth 0 to 2 points: 0 points if the variable is not reported, 1 point if the agency reports only a statewide aggregate, and 2 points if the agency provides facility-level data for that variable.
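This rule can be sketched in a few lines of Python. The sketch assumes each variable's reporting level is recorded with one of three hypothetical labels (`not_reported`, `statewide`, `facility`); the labels, variable keys, and function name are illustrative, not the project's actual code. Summing over the six variables for one population gives the maximum of 12 points shown in each reporting column of the scorecard.

```python
# Hypothetical labels for how an agency reports a single variable.
REPORTING_POINTS = {"not_reported": 0, "statewide": 1, "facility": 2}

# The six variables scored separately for incarcerated people and for staff.
VARIABLES = (
    "cumulative_cases",
    "cumulative_deaths",
    "cumulative_tests",
    "active_cases",
    "population",
    "vaccinations",
)


def reporting_score(levels: dict[str, str]) -> int:
    """Sum 0/1/2 points over the six variables for one population.

    Variables missing from `levels` are treated as not reported.
    """
    return sum(REPORTING_POINTS[levels.get(v, "not_reported")] for v in VARIABLES)


# Every variable at the facility level except population, reported statewide only.
example = {v: "facility" for v in VARIABLES}
example["population"] = "statewide"
print(reporting_score(example))  # 11
```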

Data Reporting Metrics:

- Cumulative cases: The agency reports the number of incarcerated people/staff who have ever tested positive for COVID-19. (Note: Some agencies remove cases from their total number of cases when people are released, which means their numbers are not true cumulative case counts. These agencies do not receive points for the metric, but we still report these data because they are the only data available.)

- Cumulative deaths: The agency reports the total number of incarcerated people/staff who have died with or from COVID-19. (Note: Some agencies do not include people who were positive for COVID-19 but were found to have died of another cause. We believe that agencies should include all people who had COVID-19 at the time of their death and note whether infection was indicated as the direct cause of their death.)

- Cumulative tests: The agency reports the total number of tests performed on incarcerated individuals and/or on staff throughout the pandemic. (Note: While a few agencies report the number of people tested, agencies only receive points for reporting the number of tests administered. Monitoring for COVID-19 requires regular testing, and reporting only the number of people tested obscures how often each person is tested.)

- Active cases: The agency reports the total number of incarcerated individuals/staff who have an active COVID-19 infection and have not been deemed recovered.

- Population: The agency reports the total number of incarcerated individuals/staff within a particular facility. (Note: As with all other metrics, agencies only receive points for including total population on their COVID-19 dashboards, not for reporting population elsewhere on their website.)

- Vaccinations: The agency reports the number of incarcerated people/staff who have received at least one dose of the vaccine, the number who have completed their vaccination schedule, and/or the number of vaccine doses administered. (Note: While we assigned points for multiple types of vaccination variables, we urge agencies to report the number of people who have received doses, rather than the number of doses administered. Because vaccines vary in the number of doses required, it is not possible to discern how many people have been vaccinated when agencies report only the number of doses or do not specify the definition of their vaccination variable. Agencies that do not clearly define which vaccination variable they report lost points on the “clearly defined” metric.)
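The ambiguity of dose counts can be made concrete with a little arithmetic. This Python sketch assumes that every vaccinated person has received either one or two doses (consistent with the vaccines authorized in the U.S. as of mid-2021); the function name is hypothetical. Under that assumption, a count of doses only bounds the number of people vaccinated rather than identifying it.

```python
def people_vaccinated_bounds(doses: int) -> tuple[int, int]:
    """Return the (fewest, most) people with at least one dose that are
    consistent with a reported count of doses administered.

    Assumes every person has received either one or two doses.
    """
    if doses < 0:
        raise ValueError("dose count cannot be negative")
    fewest = (doses + 1) // 2  # as many people as possible received two doses
    most = doses               # every dose went to a different person
    return fewest, most


# A dashboard reporting 1,000 doses could describe anywhere from 500 to 1,000 people.
print(people_vaccinated_bounds(1000))  # (500, 1000)
```

The width of that range is why a count of people is more informative than a count of doses.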

Data Quality

The data quality section of the scorecard consists of four metrics related to the manner in which agencies report the 12 variables mentioned above. We assessed agencies on whether their data are presented in a format that can be easily read by computer software, whether they report data on a regular basis (i.e., at least weekly), whether they clearly define the variables they report, and whether they display historical data for at least one of these variables. Although machine readability may matter only to a particular set of data users, it is a critical feature of functional dashboards, enabling researchers to collect and compare data efficiently.

Each data quality metric was assessed on a binary scale: 2 points for ‘Yes’ and 0 points for ‘No’. We awarded 2 points for ‘Yes’ rather than 1 so that each data quality metric carries the same maximum weight as a single data reporting variable.
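Under these rules, data quality is worth at most 8 points and data reporting at most 24 (2 points × 6 variables × 2 populations), which together give the 32-point maximum used for letter grades. A minimal Python sketch of the data quality tally, with hypothetical metric keys:

```python
QUALITY_METRICS = (
    "machine_readable",
    "regularly_updated",
    "clearly_defined",
    "contextualized_historically",
)
POINTS_PER_YES = 2  # same as the maximum for one data reporting variable


def quality_score(answers: dict[str, bool]) -> int:
    """Award 2 points per 'Yes'; metrics missing from `answers` count as 'No'."""
    return sum(POINTS_PER_YES for m in QUALITY_METRICS if answers.get(m, False))


MAX_QUALITY = POINTS_PER_YES * len(QUALITY_METRICS)  # 4 metrics x 2 points = 8
MAX_REPORTING = 2 * 6 * 2  # 2 points x 6 variables x 2 populations = 24
MAX_TOTAL = MAX_QUALITY + MAX_REPORTING  # 32

print(quality_score({"regularly_updated": True, "clearly_defined": True}))  # 4
```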

Data Quality Metrics

- Machine readable: Data are presented through an API or in JSON, CSV, or XML format. Static images, PDFs, and HTML pages are not considered machine readable.

- Regularly updated: Data are updated at least once per week, with a visible timestamp.

- Clearly defined: Variable definitions are visible on the agency website (e.g., in a data dictionary or table footnotes).

- Contextualized historically: Historical data for at least one of the key variables are displayed on the agency website.
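Two of these checks are mechanical enough to sketch in Python. The version below is an assumption about how one might apply them, not the scorecard's published procedure: it treats "regularly updated" as requiring at least one visible timestamp, no gap of more than seven days between consecutive updates, and a latest update within a week of the assessment date. The function names and the `as_of` parameter are hypothetical.

```python
from datetime import date

# Formats the scorecard counts as machine readable; images, PDFs, and HTML are not.
MACHINE_READABLE_FORMATS = {"api", "json", "csv", "xml"}


def is_machine_readable(fmt: str) -> bool:
    """True if a dashboard's data format is one of the accepted formats."""
    return fmt.strip().lower() in MACHINE_READABLE_FORMATS


def is_regularly_updated(update_dates: list[date], as_of: date, max_gap_days: int = 7) -> bool:
    """Hypothetical weekly-update check based on visible timestamps."""
    if not update_dates:
        return False  # no visible timestamp, so the metric cannot be met
    ordered = sorted(update_dates)
    # No gap between consecutive updates may exceed the allowed window.
    gaps_ok = all((later - earlier).days <= max_gap_days
                  for earlier, later in zip(ordered, ordered[1:]))
    # The most recent update must also fall within the window before `as_of`.
    return gaps_ok and (as_of - ordered[-1]).days <= max_gap_days


weekly = [date(2021, 6, 1), date(2021, 6, 8), date(2021, 6, 15)]
biweekly = [date(2021, 6, 1), date(2021, 6, 15)]
print(is_machine_readable("CSV"), is_machine_readable("pdf"))  # True False
print(is_regularly_updated(weekly, date(2021, 6, 15)))  # True
print(is_regularly_updated(biweekly, date(2021, 6, 15)))  # False
```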

There are several nuanced issues with data quality that were not captured by the above metrics. For example, we have observed unexplained fluctuations in the total number of COVID-19 tests and deaths reported by the Pennsylvania Department of Corrections. In response to inquiries about the inconsistencies, the agency took its dashboard offline in late January to make adjustments and only recently reinstated it. In the intervening months, the PA DOC lost points for the data it was missing as of the scoring date, but we did not alter grades for changes in reporting.

A different but related issue exists with the data reported by the correctional departments in Florida, Arkansas, and Wyoming. Over the course of the pandemic, the agencies have gradually reduced the granularity of data included on their dashboards, becoming less transparent over time. The DOCs only earn points for the variables they report at the time we assess them, regardless of what they have reported in the past.

Where such issues raise specific concerns about data transparency, we have noted and briefly explained them on the relevant state’s page. While not comprehensive, these notes provide important context about agencies’ reporting practices.

Assigning Letter Grades

We assigned standard letter grades to each agency based on the number of points earned out of a maximum of 32. The letter grades correspond to the following score ranges:

A: 29-32

B: 26-28

C: 23-25

D: 20-22

F: 0-19
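The grade bands can be expressed as a small Python function. This is a sketch, assuming integer scores from 0 to 32 and that a score of 19 counts as an F (as the 19-point F grades for BOP and North Carolina in the table below indicate); the function name is hypothetical. The usage line reproduces California's drop from 26 points (B) to 20 points (D) after losing six points this round.

```python
def letter_grade(points: int) -> str:
    """Map a total scorecard score (0-32) to a letter grade."""
    if not 0 <= points <= 32:
        raise ValueError("score must be between 0 and 32")
    # Lower bound of each grade band, checked from highest to lowest.
    for floor, grade in ((29, "A"), (26, "B"), (23, "C"), (20, "D")):
        if points >= floor:
            return grade
    return "F"  # 19 points or fewer


# California: 26 points (B) before losing six staff-reporting points, 20 (D) after.
print(letter_grade(26), letter_grade(26 - 6))  # B D
```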

Please let us know if you use this scorecard as a tool to advocate for better data transparency and quality in your state.

Carceral Agency Scores

| Carceral Agency | Overall | Data Quality | Reporting for Incarcerated People | Reporting for Staff |
|---|---|---|---|---|
| BOP | F (19 / 32) | 4 / 8 | 7 / 12 | 8 / 12 |
| ICE | F (10 / 32) | 2 / 8 | 8 / 12 | 0 / 12 |
| Alabama | D (20 / 32) | 4 / 8 | 8 / 12 | 8 / 12 |
| Alaska | F (9 / 32) | 4 / 8 | 5 / 12 | 0 / 12 |
| Arizona | F (10 / 32) | 2 / 8 | 7 / 12 | 1 / 12 |
| Arkansas | F (7 / 32) | 4 / 8 | 2 / 12 | 1 / 12 |
| California | D (20 / 32) | 6 / 8 | 10 / 12 | 4 / 12 |
| Colorado | F (16 / 32) | 4 / 8 | 9 / 12 | 3 / 12 |
| Connecticut | F (9 / 32) | 2 / 8 | 5 / 12 | 2 / 12 |
| Delaware | F (13 / 32) | 2 / 8 | 7 / 12 | 4 / 12 |
| District of Columbia | F (10 / 32) | 6 / 8 | 2 / 12 | 2 / 12 |
| Florida | F (6 / 32) | 2 / 8 | 3 / 12 | 1 / 12 |
| Georgia | F (15 / 32) | 2 / 8 | 7 / 12 | 6 / 12 |
| Hawaii | F (12 / 32) | 2 / 8 | 8 / 12 | 2 / 12 |
| Idaho | F (14 / 32) | 4 / 8 | 6 / 12 | 4 / 12 |
| Illinois | F (12 / 32) | 0 / 8 | 6 / 12 | 6 / 12 |
| Indiana | D (20 / 32) | 4 / 8 | 8 / 12 | 8 / 12 |
| Iowa | F (18 / 32) | 4 / 8 | 8 / 12 | 6 / 12 |
| Kansas | F (18 / 32) | 2 / 8 | 8 / 12 | 8 / 12 |
| Kentucky | F (14 / 32) | 2 / 8 | 6 / 12 | 6 / 12 |
| Louisiana | F (16 / 32) | 2 / 8 | 8 / 12 | 6 / 12 |
| Maine | F (8 / 32) | 2 / 8 | 6 / 12 | 0 / 12 |
| Maryland | D (21 / 32) | 4 / 8 | 9 / 12 | 8 / 12 |
| Massachusetts | F (0 / 32) | 0 / 8 | 0 / 12 | 0 / 12 |
| Michigan | F (16 / 32) | 4 / 8 | 7 / 12 | 5 / 12 |
| Minnesota | D (21 / 32) | 6 / 8 | 11 / 12 | 4 / 12 |
| Mississippi | F (4 / 32) | 0 / 8 | 4 / 12 | 0 / 12 |
| Missouri | F (10 / 32) | 0 / 8 | 6 / 12 | 4 / 12 |
| Montana | F (8 / 32) | 2 / 8 | 3 / 12 | 3 / 12 |
| Nebraska | F (7 / 32) | 2 / 8 | 5 / 12 | 0 / 12 |
| Nevada | F (10 / 32) | 2 / 8 | 4 / 12 | 4 / 12 |
| New Hampshire | F (18 / 32) | 2 / 8 | 11 / 12 | 5 / 12 |
| New Jersey | F (8 / 32) | 0 / 8 | 5 / 12 | 3 / 12 |
| New Mexico | F (9 / 32) | 2 / 8 | 7 / 12 | 0 / 12 |
| New York | F (10 / 32) | 2 / 8 | 6 / 12 | 2 / 12 |
| North Carolina | F (19 / 32) | 8 / 8 | 10 / 12 | 1 / 12 |
| North Dakota | D (22 / 32) | 4 / 8 | 10 / 12 | 8 / 12 |
| Ohio | F (14 / 32) | 4 / 8 | 4 / 12 | 6 / 12 |
| Oklahoma | F (8 / 32) | 2 / 8 | 5 / 12 | 1 / 12 |
| Oregon | F (17 / 32) | 8 / 8 | 7 / 12 | 2 / 12 |
| Pennsylvania | C (24 / 32) | 6 / 8 | 12 / 12 | 6 / 12 |
| Rhode Island | F (10 / 32) | 2 / 8 | 4 / 12 | 4 / 12 |
| South Carolina | F (16 / 32) | 2 / 8 | 8 / 12 | 6 / 12 |
| South Dakota | F (14 / 32) | 2 / 8 | 6 / 12 | 6 / 12 |
| Tennessee | F (16 / 32) | 2 / 8 | 9 / 12 | 5 / 12 |
| Texas | F (14 / 32) | 6 / 8 | 5 / 12 | 3 / 12 |
| Utah | F (9 / 32) | 2 / 8 | 6 / 12 | 1 / 12 |
| Vermont | F (13 / 32) | 4 / 8 | 5 / 12 | 4 / 12 |
| Virginia | F (13 / 32) | 2 / 8 | 7 / 12 | 4 / 12 |
| Washington | F (13 / 32) | 2 / 8 | 6 / 12 | 5 / 12 |
| West Virginia | B (26 / 32) | 8 / 8 | 12 / 12 | 6 / 12 |
| Wisconsin | D (21 / 32) | 8 / 8 | 10 / 12 | 3 / 12 |
| Wyoming | F (7 / 32) | 2 / 8 | 3 / 12 | 2 / 12 |
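Because each overall score is the sum of the three component columns, the table can be checked mechanically. The Python sketch below copies the rows above, recomputes each total and letter grade (assuming, as the BOP and North Carolina rows indicate, that 19 points or fewer is an F), and counts failing grades. The row layout and function name are illustrative choices, not part of the scorecard data release.

```python
from collections import Counter

# (agency, reported grade, data quality, incarcerated people, staff), from the table above.
ROWS = [
    ("BOP", "F", 4, 7, 8), ("ICE", "F", 2, 8, 0),
    ("Alabama", "D", 4, 8, 8), ("Alaska", "F", 4, 5, 0),
    ("Arizona", "F", 2, 7, 1), ("Arkansas", "F", 4, 2, 1),
    ("California", "D", 6, 10, 4), ("Colorado", "F", 4, 9, 3),
    ("Connecticut", "F", 2, 5, 2), ("Delaware", "F", 2, 7, 4),
    ("District of Columbia", "F", 6, 2, 2), ("Florida", "F", 2, 3, 1),
    ("Georgia", "F", 2, 7, 6), ("Hawaii", "F", 2, 8, 2),
    ("Idaho", "F", 4, 6, 4), ("Illinois", "F", 0, 6, 6),
    ("Indiana", "D", 4, 8, 8), ("Iowa", "F", 4, 8, 6),
    ("Kansas", "F", 2, 8, 8), ("Kentucky", "F", 2, 6, 6),
    ("Louisiana", "F", 2, 8, 6), ("Maine", "F", 2, 6, 0),
    ("Maryland", "D", 4, 9, 8), ("Massachusetts", "F", 0, 0, 0),
    ("Michigan", "F", 4, 7, 5), ("Minnesota", "D", 6, 11, 4),
    ("Mississippi", "F", 0, 4, 0), ("Missouri", "F", 0, 6, 4),
    ("Montana", "F", 2, 3, 3), ("Nebraska", "F", 2, 5, 0),
    ("Nevada", "F", 2, 4, 4), ("New Hampshire", "F", 2, 11, 5),
    ("New Jersey", "F", 0, 5, 3), ("New Mexico", "F", 2, 7, 0),
    ("New York", "F", 2, 6, 2), ("North Carolina", "F", 8, 10, 1),
    ("North Dakota", "D", 4, 10, 8), ("Ohio", "F", 4, 4, 6),
    ("Oklahoma", "F", 2, 5, 1), ("Oregon", "F", 8, 7, 2),
    ("Pennsylvania", "C", 6, 12, 6), ("Rhode Island", "F", 2, 4, 4),
    ("South Carolina", "F", 2, 8, 6), ("South Dakota", "F", 2, 6, 6),
    ("Tennessee", "F", 2, 9, 5), ("Texas", "F", 6, 5, 3),
    ("Utah", "F", 2, 6, 1), ("Vermont", "F", 4, 5, 4),
    ("Virginia", "F", 2, 7, 4), ("Washington", "F", 2, 6, 5),
    ("West Virginia", "B", 8, 12, 6), ("Wisconsin", "D", 8, 10, 3),
    ("Wyoming", "F", 2, 3, 2),
]


def letter_grade(points: int) -> str:
    """Scorecard grade bands, treating 19 or fewer points as an F."""
    for floor, grade in ((29, "A"), (26, "B"), (23, "C"), (20, "D")):
        if points >= floor:
            return grade
    return "F"


# Agencies whose reported grade disagrees with the grade implied by their components.
mismatches = [name for name, grade, quality, incarcerated, staff in ROWS
              if letter_grade(quality + incarcerated + staff) != grade]
counts = Counter(row[1] for row in ROWS)
share_f = counts["F"] / len(ROWS)

print(len(ROWS), mismatches)  # 53 []
print(f"{counts['F']} of {len(ROWS)} agencies received an F ({share_f:.0%})")
```

Run against the table above, every row is internally consistent, and 44 of the 53 agencies (about 83%) received an F, in line with the "more than 80%" figure at the top of this post.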
