March 17th, 2021 • Liz DeWolf, Poornima Rajeshwar, and Erika Tyagi

Missing the Mark: Data Reporting & Quality Scorecard

The UCLA Law COVID Behind Bars Data Project has been tracking the coronavirus pandemic in prisons, jails, and other detention facilities across the country since March 2020, an effort made immeasurably harder by the lack of transparency and consistency in data reporting by carceral agencies.

Several times per week, our team of data scientists systematically collects self-reported data on the number of COVID-19 cases, deaths, and tests performed (among other variables) from 50 state correctional agencies, the District of Columbia’s Department of Corrections, the Federal Bureau of Prisons (BOP), U.S. Immigration and Customs Enforcement (ICE), and several county jail systems.

Our data come directly from official agency websites, but the quality of these dashboards and the frequency with which they are updated vary widely. Carceral agencies have long been opaque. They resist basic transparency, and federal and state reporting mandates, to the extent they exist, are poorly enforced. Data are hard to access, infrequently collected, and released only after long delays. Unfortunately, these patterns have continued during the pandemic.

Inconsistencies in data reporting practices across agencies — including what variables are reported and how — make our efforts to collect and standardize COVID-19 data in the carceral context extremely challenging. Yet there is an urgent national need for the data we seek. Carceral facilities are hotspots for viral spread and people living inside are among the most vulnerable to infection and death. Tracking the virus in prisons, jails, and detention centers across the country is necessary to inform critical public health interventions like decarceration and vaccination.

Some of these inconsistencies produce gaps in our data. Certain agencies simply do not report key variables, such as cumulative COVID-19 cases or deaths. Others fail to report data on a regular basis.

Agencies also report data in formats that are hard to access or analyze. In some instances, they post updates as images or PDFs that our web scrapers struggle to process, forcing us to resort to painstaking manual data collection. In other cases, agencies do not clearly define the variables they report, and we have to make educated guesses about how to interpret the data. In several instances, agencies have changed the format in which they post data without explanation, requiring us to build new scrapers on short notice.

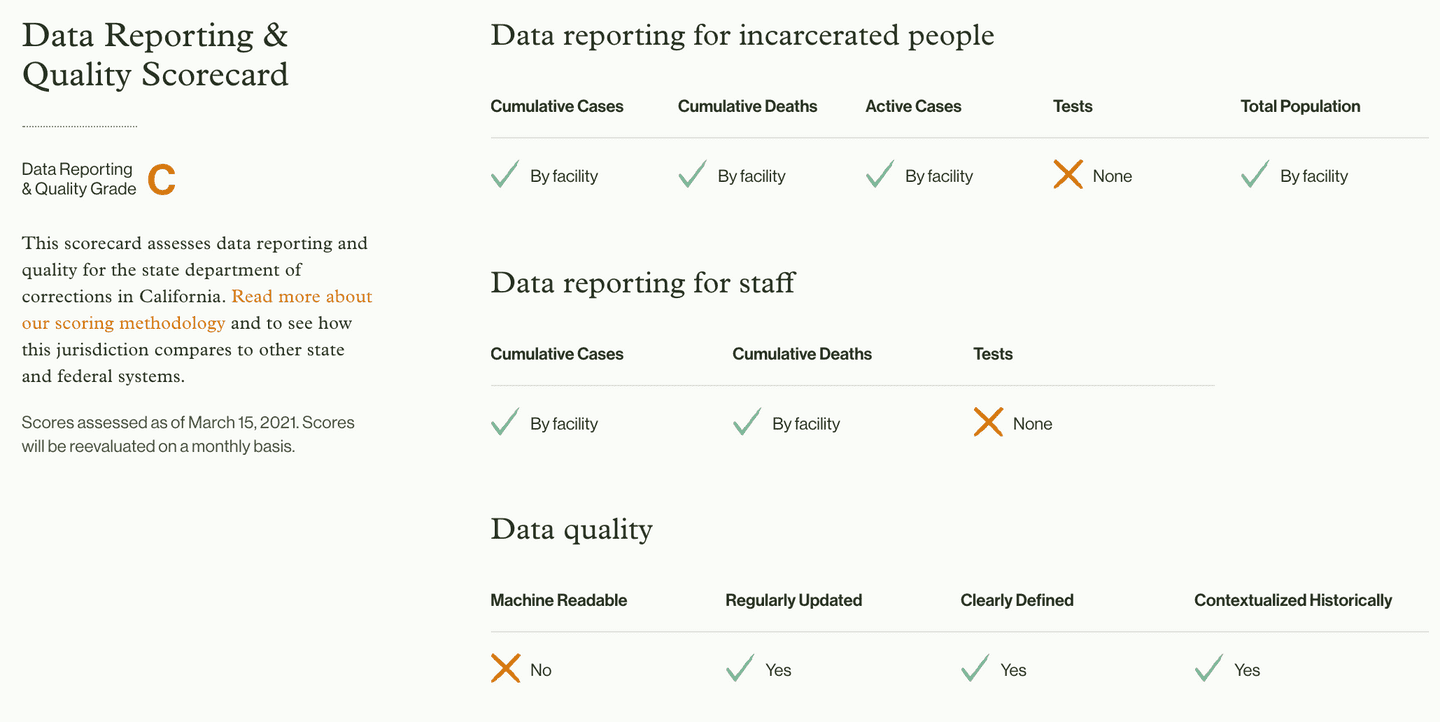

To demonstrate these inconsistencies in data reporting and quality, we have created a scorecard to assess each of the 53 major state and federal agencies according to a number of metrics related to what COVID-19 data they report (our data reporting metrics) and how they report those data (our data quality metrics).

We assigned our first round of scores and grades on March 15, 2021. Of the 53 systems assessed, the highest grade was a B for the West Virginia Division of Corrections and Rehabilitation. The state correctional agencies in California, Indiana, Minnesota, North Carolina, Oregon, and Wisconsin received a grade of C, and the agencies in Colorado, Maryland, Michigan, and North Dakota, as well as the BOP, received a D. The other 41 — more than 75% of agencies — failed.

The scorecard for each state agency is displayed on that state’s data page, and the BOP’s scorecard is on the federal data page. The ICE scorecard will be displayed on a forthcoming page dedicated to immigration detention data. The raw scorecard data for all 53 agencies are also available.

Importantly, the scores we have assigned each agency do not reflect our judgment on how each has managed and responded to COVID-19 outbreaks, nor on how reliably we believe the reported data correspond to true facts on the ground. They reflect only our judgments on how comprehensive the data they report are and on how well they present those data.

About the Metrics

Data Reporting

Our metrics for data reporting are tied to the eight key variables we aim to collect from each jurisdiction. Out of these, five relate to incarcerated people and three concern correctional staff. We have previously outlined why, at a minimum, all correctional agencies should report COVID-19 cases, deaths, and tests for incarcerated people and staff, and also explained why the reporting of real-time facility-level population data is essential. Knowing how many people are incarcerated at each facility is critical to put total cases, deaths, and tests in context. No agency, with the exception of the West Virginia Division of Corrections and Rehabilitation, reports all eight variables, and even this agency fails to report some variables at the facility level.
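To see why population figures matter, consider two facilities with the same number of cases but very different sizes. The minimal sketch below normalizes a count to a rate per 1,000 incarcerated people; the function name and the facility figures are hypothetical, chosen only to illustrate the arithmetic.

```python
def rate_per_1000(count: int, population: int) -> float:
    """Express a count (e.g., cumulative cases) per 1,000 incarcerated people."""
    if population <= 0:
        raise ValueError("population must be positive")
    return 1000 * count / population


# Hypothetical facilities with identical case counts but different populations.
facilities = {
    "Facility A": {"cumulative_cases": 300, "population": 1500},
    "Facility B": {"cumulative_cases": 300, "population": 6000},
}

for name, counts in facilities.items():
    rate = rate_per_1000(counts["cumulative_cases"], counts["population"])
    print(f"{name}: {rate:.0f} cases per 1,000 people")
```

The same 300 cases amount to 200 per 1,000 people in the smaller facility but 50 per 1,000 in the larger one, a difference that raw counts alone cannot reveal.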

To assign scores for data reporting, we first assessed whether an agency reports each variable at all, and then whether it reports the variable only as a statewide aggregate or at the facility level. Each variable received 0 to 2 points: 0 if the variable is not reported, 1 if the agency reports only statewide aggregates, and 2 if the agency provides facility-level data for that variable.
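The 0-to-2 rule for a single variable can be written as a small function. This is a sketch under our own assumptions: the names `reporting_level` and `reporting_points`, and the input shape (an optional mapping of facility names to values plus an optional statewide total), are hypothetical and not part of the project's published tooling.

```python
from typing import Mapping, Optional

POINTS = {"not_reported": 0, "statewide_only": 1, "facility_level": 2}


def reporting_level(facility_values: Optional[Mapping[str, int]],
                    statewide_total: Optional[int]) -> str:
    """Classify how one variable is published on an agency dashboard."""
    if facility_values:  # non-empty per-facility figures are posted
        return "facility_level"
    if statewide_total is not None:  # only a single system-wide figure is posted
        return "statewide_only"
    return "not_reported"


def reporting_points(facility_values: Optional[Mapping[str, int]],
                     statewide_total: Optional[int]) -> int:
    """Translate the reporting level into 0, 1, or 2 points."""
    return POINTS[reporting_level(facility_values, statewide_total)]


print(reporting_points({"Facility A": 12, "Facility B": 3}, 15))  # facility-level data
print(reporting_points(None, 15))                                  # statewide total only
print(reporting_points(None, None))                                # not reported
```

Note that a statewide total of 0 still counts as reported, since the check is for a missing value rather than a zero.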

Data Reporting Metrics — Incarcerated People:

- Cumulative cases: The agency reports the number of individuals who have ever tested positive for COVID-19 while incarcerated at a particular facility.

- Cumulative deaths: The agency reports the total number of individuals who have died with or from COVID-19 while incarcerated in a particular facility. (Note: Some agencies do not include people who were positive for COVID-19 but were found to have died of another cause. Our position is that agencies should include all people who had COVID-19 and died, and note whether infection was the cause of their death.)

- Cumulative tests: The agency reports the total number of tests performed on incarcerated individuals throughout the pandemic. (Note: While a few agencies report the number of people tested, agencies receive points only for reporting the number of tests administered. Monitoring for COVID-19 requires regular testing, and reporting only the number of people tested obscures how often testing occurs.)

- Active cases: The agency reports the total number of individuals currently incarcerated at a particular facility who have an active COVID-19 infection and have not been deemed recovered.

- Total population: The agency reports the total number of individuals incarcerated within a particular facility. (Note: As with all other metrics, agencies only receive points for including total population on their COVID-19 dashboards, not for reporting population elsewhere on their website.)
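A small worked example shows why the number of people tested can hide how often testing happens. The two hypothetical schedules below reach the same 100 people, but one tests each person once while the other retests everyone weekly for eight weeks; both schedules are invented for illustration.

```python
from typing import Dict, List

# Each list entry is one test administered, labeled with a hypothetical person ID.
one_time_screening: List[str] = [f"person-{i}" for i in range(100)]
weekly_screening: List[str] = [f"person-{i}" for _week in range(8) for i in range(100)]


def summarize(tests: List[str]) -> Dict[str, float]:
    """Compare the count of people tested with the count of tests administered."""
    tests_administered = len(tests)
    people_tested = len(set(tests))
    per_person = tests_administered / people_tested if people_tested else 0.0
    return {
        "people_tested": people_tested,
        "tests_administered": tests_administered,
        "tests_per_person": per_person,
    }


print(summarize(one_time_screening))
print(summarize(weekly_screening))
```

Both schedules report 100 people tested; only the count of tests administered (100 versus 800) reveals the difference in testing frequency.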

Data Reporting Metrics — Staff:

- Cumulative cases: The agency reports the total number of confirmed COVID-19 cases among staff at a particular facility.

- Cumulative deaths: The agency reports the total number of staff who have died with or from COVID-19 who worked in a particular facility.

- Cumulative tests: The agency reports the total number of tests performed on staff throughout the pandemic.
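Summing the per-variable points yields the two reporting subtotals shown in the scores table below: up to 10 points for the five variables on incarcerated people and up to 6 for the three staff variables. The sketch assumes each variable has already been scored 0, 1, or 2; the variable keys and the sample agency are hypothetical.

```python
from typing import Dict, Sequence

INCARCERATED_VARS = ("cases", "deaths", "tests", "active_cases", "population")
STAFF_VARS = ("cases", "deaths", "tests")


def subtotal(points: Dict[str, int], variables: Sequence[str]) -> int:
    """Sum 0-2 points over the listed variables; a missing variable counts as 0."""
    for var in variables:
        if points.get(var, 0) not in (0, 1, 2):
            raise ValueError(f"{var}: points must be 0, 1, or 2")
    return sum(points.get(var, 0) for var in variables)


# Hypothetical agency: facility-level data for most incarcerated-population
# variables, statewide testing totals, no population on the dashboard,
# and statewide staff cases only.
incarcerated = {"cases": 2, "deaths": 2, "tests": 1, "active_cases": 2, "population": 0}
staff = {"cases": 1}

print(subtotal(incarcerated, INCARCERATED_VARS), "/", 2 * len(INCARCERATED_VARS))
print(subtotal(staff, STAFF_VARS), "/", 2 * len(STAFF_VARS))
```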

A note on vaccine data: An important variable that we did not include in our scorecard, but which we do collect where reported, is the number of incarcerated people and staff who have been vaccinated. A number of jurisdictions have not yet initiated vaccinations in their facilities, and we have therefore decided to delay assessing agencies for this metric until a critical mass of departments initiate vaccination.

Data Quality

The data quality section of the scorecard consists of four metrics related to the manner in which agencies report the eight variables mentioned above. We assessed agencies on whether or not their data are presented in a format that can be easily read by computer software, whether they report data on a regular basis (i.e., at least weekly), whether they clearly define the variables they report, and whether they display any historical trends for at least one of these variables. Although machine-readability may only be important to a particular set of data users, it is a critical feature of functional dashboards that enable researchers to collect and compare data efficiently.

Each data quality metric was scored as a binary: 2 points for ‘Yes’ and 0 points for ‘No’. We awarded 2 points for ‘Yes’ rather than 1 so that each data quality metric carries the same maximum weight as each data reporting metric.
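Because every metric, whether reporting or quality, tops out at 2 points, the 12 metrics contribute equally to the 24-point maximum. A minimal sketch of the quality subtotal follows, assuming the four yes/no judgments have already been made; the metric keys are our own illustrative names.

```python
from typing import Dict

QUALITY_METRICS = ("machine_readable", "regularly_updated", "clearly_defined", "historical_context")
POINTS_PER_YES = 2  # matches the 2-point maximum of each data reporting metric


def quality_score(answers: Dict[str, bool]) -> int:
    """Award 2 points per 'Yes' and 0 per 'No'; unanswered metrics count as 'No'."""
    return sum(POINTS_PER_YES for metric in QUALITY_METRICS if answers.get(metric, False))


MAX_QUALITY = POINTS_PER_YES * len(QUALITY_METRICS)  # 4 metrics x 2 points = 8
MAX_REPORTING = 2 * 8                                 # 8 variables x 2 points = 16
MAX_TOTAL = MAX_QUALITY + MAX_REPORTING               # 24

# Hypothetical agency: timestamped weekly updates with footnoted definitions,
# but PDF-only data and no historical charts.
answers = {"machine_readable": False, "regularly_updated": True,
           "clearly_defined": True, "historical_context": False}
print(quality_score(answers), "/", MAX_QUALITY)
```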

Data Quality Metrics

- Machine readable: Data are available through an API or in JSON, CSV, or XML format. Static images, PDFs, and HTML pages are not considered machine readable.

- Regularly updated: Data are updated at least once per week, with a visible timestamp.

- Clearly defined: Variable definitions are visible on the agency website (e.g., in a data dictionary or table footnotes).

- Contextualized historically: Historical trends for at least one of the key variables are displayed on the agency website.
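Two of these criteria lend themselves to simple automated checks. The sketch below is a rough approximation under stated assumptions: it infers the format from an explicit `api` label or a file extension, and it treats a dashboard as regularly updated if its visible timestamp is no more than seven days old. A single timestamp check is only a proxy for updating at least once per week, and both helper names are hypothetical, not the project's actual tooling.

```python
from datetime import datetime, timedelta
from typing import Optional

MACHINE_READABLE = {"api", "json", "csv", "xml"}  # formats named in the metric above


def is_machine_readable(source: str) -> bool:
    """Judge a data source by an explicit 'api' label or its file extension."""
    kind = source.lower().rsplit(".", 1)[-1]
    return kind in MACHINE_READABLE


def is_regularly_updated(last_updated: Optional[datetime], now: datetime) -> bool:
    """Require a visible timestamp no more than seven days old."""
    if last_updated is None:  # no visible timestamp earns no credit
        return False
    return now - last_updated <= timedelta(days=7)


now = datetime(2021, 3, 15)
print(is_machine_readable("covid_cases.csv"), is_machine_readable("daily_update.pdf"))
print(is_regularly_updated(datetime(2021, 3, 10), now), is_regularly_updated(None, now))
```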

There are several nuanced problems with data quality that were not captured by the above metrics. For example, we recently observed unexplained fluctuations in the total number of COVID-19 tests and deaths reported by the Pennsylvania Department of Corrections. In response to inquiries about the inconsistencies, the agency took its dashboard offline in late January to make adjustments and has not yet reinstated it. The PA DOC lost points for the data it is currently missing, but we did not alter grades for changes in reporting.
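Cumulative counts such as total tests or total deaths should never decrease, so one simple way to surface fluctuations like these is to flag any point at which a cumulative series falls. The sketch below uses an invented series, not Pennsylvania's actual figures, and a flagged decrease may reflect a legitimate correction rather than an error.

```python
from typing import List, Tuple


def find_decreases(series: List[Tuple[str, int]]) -> List[Tuple[str, int, int]]:
    """Return (date, previous, current) wherever a cumulative count drops."""
    flagged = []
    for (_, prev), (date, curr) in zip(series, series[1:]):
        if curr < prev:
            flagged.append((date, prev, curr))
    return flagged


# Hypothetical cumulative deaths collected on successive dates.
cumulative_deaths = [
    ("2021-01-04", 110),
    ("2021-01-11", 118),
    ("2021-01-18", 112),  # falls without explanation
    ("2021-01-25", 121),
]

print(find_decreases(cumulative_deaths))
```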

A different but related issue exists with the data reported by the correctional departments in Florida, Arkansas, and Wyoming — over the course of the pandemic, the agencies have gradually reduced the granularity of data included on their dashboards, becoming less transparent over time. Again, the DOCs lost points on our scorecard for not reporting key variables, but we did not alter grades for changes in transparency.

Where we have observed issues like these that raise specific concerns about data transparency, we have noted and briefly explained them on the relevant state’s page. While not comprehensive, these notes provide important context about agencies’ reporting practices.

Assigning Letter Grades

We assigned standard letter grades to each agency based on the percentage of points earned out of a maximum total of 24. The letter grades are associated with score ranges as follows:

A: 22-24

B: 20-21

C: 17-19

D: 15-16

F: <15
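The cutoffs translate directly into a lookup on the point total. In the sketch below, 15 points maps to a D, matching the grade Colorado received in the scores table (15 / 24). As an arithmetic observation rather than a stated rule, the cutoffs also coincide with rounding 90%, 80%, 70%, and 60% of 24 points up to the next whole point. The function name is illustrative.

```python
import math

MAX_POINTS = 24
# (minimum points, grade), checked from highest to lowest
CUTOFFS = [(22, "A"), (20, "B"), (17, "C"), (15, "D")]


def letter_grade(points: int) -> str:
    """Map a 0-24 point total to a letter grade; anything below 15 is an F."""
    if not 0 <= points <= MAX_POINTS:
        raise ValueError(f"points must be between 0 and {MAX_POINTS}")
    for minimum, grade in CUTOFFS:
        if points >= minimum:
            return grade
    return "F"


# Rounding conventional percentage thresholds up reproduces the same cutoffs.
derived = [math.ceil(pct * MAX_POINTS / 100) for pct in (90, 80, 70, 60)]
print(derived)
print(letter_grade(20), letter_grade(15), letter_grade(11))
```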

We plan to reassess scores on a monthly basis. Please let us know if you use this scorecard as a tool to advocate for better data transparency and quality in your state.

Carceral Agency Scores

| Carceral Agency | Overall | Data Quality | Reporting for Incarcerated People | Reporting for Staff |
|---|---|---|---|---|
| BOP | F (11 / 24) | 4 / 8 | 5 / 10 | 2 / 6 |
| ICE | F (10 / 24) | 2 / 8 | 8 / 10 | 0 / 6 |
| Alabama | F (14 / 24) | 4 / 8 | 6 / 10 | 4 / 6 |
| Alaska | F (7 / 24) | 4 / 8 | 3 / 10 | 0 / 6 |
| Arizona | F (9 / 24) | 2 / 8 | 6 / 10 | 1 / 6 |
| Arkansas | F (7 / 24) | 4 / 8 | 3 / 10 | 0 / 6 |
| California | C (18 / 24) | 6 / 8 | 8 / 10 | 4 / 6 |
| Colorado | D (15 / 24) | 6 / 8 | 8 / 10 | 1 / 6 |
| Connecticut | F (5 / 24) | 2 / 8 | 3 / 10 | 0 / 6 |
| Delaware | F (6 / 24) | 0 / 8 | 5 / 10 | 1 / 6 |
| District of Columbia | F (10 / 24) | 6 / 8 | 2 / 10 | 2 / 6 |
| Florida | F (7 / 24) | 4 / 8 | 2 / 10 | 1 / 6 |
| Georgia | F (11 / 24) | 2 / 8 | 5 / 10 | 4 / 6 |
| Hawaii | F (12 / 24) | 2 / 8 | 8 / 10 | 2 / 6 |
| Idaho | F (10 / 24) | 4 / 8 | 4 / 10 | 2 / 6 |
| Illinois | F (10 / 24) | 0 / 8 | 6 / 10 | 4 / 6 |
| Indiana | C (18 / 24) | 4 / 8 | 8 / 10 | 6 / 6 |
| Iowa | F (14 / 24) | 4 / 8 | 6 / 10 | 4 / 6 |
| Kansas | F (12 / 24) | 2 / 8 | 6 / 10 | 4 / 6 |
| Kentucky | F (12 / 24) | 2 / 8 | 6 / 10 | 4 / 6 |
| Louisiana | F (14 / 24) | 2 / 8 | 8 / 10 | 4 / 6 |
| Maine | F (7 / 24) | 2 / 8 | 5 / 10 | 0 / 6 |
| Maryland | D (16 / 24) | 4 / 8 | 7 / 10 | 5 / 6 |
| Massachusetts | F (0 / 24) | 0 / 8 | 0 / 10 | 0 / 6 |
| Michigan | D (16 / 24) | 4 / 8 | 7 / 10 | 5 / 6 |
| Minnesota | C (18 / 24) | 6 / 8 | 10 / 10 | 2 / 6 |
| Mississippi | F (4 / 24) | 0 / 8 | 4 / 10 | 0 / 6 |
| Missouri | F (9 / 24) | 0 / 8 | 6 / 10 | 3 / 6 |
| Montana | F (8 / 24) | 2 / 8 | 3 / 10 | 3 / 6 |
| Nebraska | F (7 / 24) | 2 / 8 | 5 / 10 | 0 / 6 |
| Nevada | F (10 / 24) | 2 / 8 | 4 / 10 | 4 / 6 |
| New Hampshire | F (11 / 24) | 2 / 8 | 7 / 10 | 2 / 6 |
| New Jersey | F (8 / 24) | 2 / 8 | 4 / 10 | 2 / 6 |
| New Mexico | F (9 / 24) | 2 / 8 | 7 / 10 | 0 / 6 |
| New York | F (12 / 24) | 4 / 8 | 6 / 10 | 2 / 6 |
| North Carolina | C (17 / 24) | 8 / 8 | 9 / 10 | 0 / 6 |
| North Dakota | D (16 / 24) | 4 / 8 | 6 / 10 | 6 / 6 |
| Ohio | F (14 / 24) | 4 / 8 | 6 / 10 | 4 / 6 |
| Oklahoma | F (8 / 24) | 2 / 8 | 5 / 10 | 1 / 6 |
| Oregon | C (17 / 24) | 8 / 8 | 7 / 10 | 2 / 6 |
| Pennsylvania | F (8 / 24) | 2 / 8 | 4 / 10 | 2 / 6 |
| Rhode Island | F (12 / 24) | 4 / 8 | 4 / 10 | 4 / 6 |
| South Carolina | F (12 / 24) | 2 / 8 | 6 / 10 | 4 / 6 |
| South Dakota | F (12 / 24) | 2 / 8 | 6 / 10 | 4 / 6 |
| Tennessee | F (14 / 24) | 2 / 8 | 8 / 10 | 4 / 6 |
| Texas | F (14 / 24) | 6 / 8 | 5 / 10 | 3 / 6 |
| Utah | F (11 / 24) | 4 / 8 | 6 / 10 | 1 / 6 |
| Vermont | F (11 / 24) | 4 / 8 | 5 / 10 | 2 / 6 |
| Virginia | F (11 / 24) | 4 / 8 | 6 / 10 | 1 / 6 |
| Washington | F (11 / 24) | 2 / 8 | 5 / 10 | 4 / 6 |
| West Virginia | B (20 / 24) | 8 / 8 | 9 / 10 | 3 / 6 |
| Wisconsin | C (18 / 24) | 8 / 8 | 8 / 10 | 2 / 6 |
| Wyoming | F (5 / 24) | 2 / 8 | 3 / 10 | 0 / 6 |
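Each overall score in the table is the sum of its three subtotals, which makes the table straightforward to check programmatically. The sketch below parses rows in the table's markdown layout (the regular expression tolerates an optional space between the grade and the parenthesized score) and confirms that the parts add up. The three sample rows carry the West Virginia, Colorado, and North Carolina figures from the table; the parser itself is a hypothetical helper, not part of the project's tooling.

```python
import re
from typing import Dict, List

OVERALL = re.compile(r"([A-F])\s*\((\d+)\s*/\s*(\d+)\)")  # e.g. "B(20 / 24)" or "B (20 / 24)"
PART = re.compile(r"(\d+)\s*/\s*(\d+)")                    # e.g. "8 / 8"


def parse_row(row: str) -> Dict[str, object]:
    """Split one markdown table row into agency, grade, total, and three subtotals."""
    cells = [cell.strip() for cell in row.strip().strip("|").split("|")]
    agency, overall, *parts = cells
    match = OVERALL.fullmatch(overall)
    if match is None:
        raise ValueError(f"unrecognized overall score: {overall!r}")
    subtotals: List[int] = []
    for part in parts:
        part_match = PART.fullmatch(part)
        if part_match is None:
            raise ValueError(f"unrecognized subtotal: {part!r}")
        subtotals.append(int(part_match.group(1)))
    return {"agency": agency, "grade": match.group(1),
            "total": int(match.group(2)), "subtotals": subtotals}


rows = [
    "| West Virginia | B(20 / 24) | 8 / 8 | 9 / 10 | 3 / 6 |",
    "| Colorado | D(15 / 24) | 6 / 8 | 8 / 10 | 1 / 6 |",
    "| North Carolina | C(17 / 24) | 8 / 8 | 9 / 10 | 0 / 6 |",
]

for row in rows:
    parsed = parse_row(row)
    status = "consistent" if sum(parsed["subtotals"]) == parsed["total"] else "MISMATCH"
    print(parsed["agency"], parsed["grade"], parsed["total"], status)
```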

Acknowledgements

Many thanks to Tom Meagher of the Marshall Project and Peter Wagner of Prison Policy Initiative for providing critical input as we developed our scorecard. Benjamin Nyblade, director of the Empirical Research Group at UCLA School of Law and an advisor for our project, also contributed thoughtful guidance on our methodology. We’d also like to thank the many participants of the 2020 MIT Policy Hackathon who, over one short weekend, took on the challenge of assessing agencies using our data and provided us with inspiration and helpful insights for the construction of our framework.

Participants of the 2020 MIT Policy Hackathon

- Shibu Antony

- Amaan Charaniya

- Lelia Hampton

- Amy Huynh

- Jeffery John

- Jong Min Jung

- Ekaterina Kostioukhina

- Tarun KS

- Akhil Kumar

- Katelyn Morrison

- Shourya Mitra Mustauphy

- Abhijit Nikhade

- Olivia Pfeiffer

- Ariana Pitcher

- Hassnain Qasim

- Ibrahim Rashid

- Ishita Rastogi

- Marley Rosario

- Maanas Sharma

- Maxim Sinelnikov

- Tishya Srivastava

- Faiz Syed

- James Tourkistas

- Anna Waterkotte

- Tiana Wong

- Marina Wyss

- Stephany YipChoy
